Proxmox Homelab Setup

Overview

This guide details how I set up my homelab. My setup uses slightly older hardware, ranging from 6th Gen to 9th Gen Intel processors. Hardware of this age is relatively inexpensive, yet you can still find desktops from this era with decent features such as NVMe slots. I'll cover what I have, what to look for when buying, and what you should already have in place, and each step of the process then gets its own separate guide. I also have a TrueNAS Scale server for mass storage, which has one 10GbE NIC dedicated to the SAN. This page is just a high-level overview of everything we'll be doing.

Hardware

For the Proxmox hosts and the backup server we'll want four desktop computers. Try to find computers with at least one NVMe slot to use with Ceph. Each should also have a PCIe 3.0 or newer slot for a 10GbE network adapter and, if possible, four RAM slots to allow for memory expansion. We'll also buy three USB Ethernet adapters, one for each Proxmox host. In total, each host will have three network adapters: the USB NIC for the host's own (management) traffic, a PCIe NIC for the SAN, and the onboard NIC for the virtual machines. Lastly, we'll need the miscellaneous equipment: three NVMe drives for Ceph, 10Gb SFP+ modules for the PCIe adapters, fiber optic cables for the SAN, an SSD for each host's OS, and memory for each host.

- 4 Desktop Computers (3 hosts, 1 PBS)

- 4 SSDs (for the Proxmox VE and PBS host OS)

- 3 USB Ethernet adapters (a separate NIC for each host's own use)

- 4x 10GbE PCIe cards (network adapters for the SAN network)

- SSD/NVMe drives for Ceph

- Memory

- SFP modules, Ethernet & Fiber cables
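The counts in the list above follow directly from the number of machines. A minimal Python sketch of that arithmetic, assuming one 10GbE fiber link per machine (three Proxmox hosts plus the PBS), one Ceph NVMe drive per Proxmox host, and an SFP+ module at both ends of every link; the TrueNAS NIC is not counted. `PartsList` and `plan_parts` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartsList:
    """Rough parts count for the cluster (hypothetical helper, not a shopping list)."""
    desktops: int
    os_ssds: int
    usb_nics: int
    pcie_10g_nics: int
    ceph_nvme_drives: int
    sfp_modules: int
    fiber_cables: int


def plan_parts(pve_hosts: int = 3, pbs_hosts: int = 1) -> PartsList:
    machines = pve_hosts + pbs_hosts
    # Assumption: every machine gets exactly one 10GbE link to the switch.
    san_links = machines
    return PartsList(
        desktops=machines,
        os_ssds=machines,            # one boot SSD per machine
        usb_nics=pve_hosts,          # management NIC, Proxmox hosts only
        pcie_10g_nics=machines,      # the PBS also sits on the 10GbE network
        ceph_nvme_drives=pve_hosts,  # one Ceph OSD drive per Proxmox host
        sfp_modules=2 * san_links,   # a transceiver at the NIC end and at the switch end
        fiber_cables=san_links,
    )


if __name__ == "__main__":
    print(plan_parts())
```

Calling `plan_parts(pve_hosts=4)` shows what adding a fourth Proxmox node would add to the list.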

Of course, these requirements are not hard and fast. For example, we could use dedicated SATA SSDs for Ceph and take a performance hit, or forget Ceph entirely and use an NFS share for high availability. The hosts could also have just two RAM slots; it really depends on how much memory you think you'll need. The important thing is to keep all of the hosts similar. For example, with Ceph we wouldn't want two hosts using NVMe drives and the third using a SATA SSD, because the entire Ceph cluster would then be limited to the speed of that SSD.
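Why one slow drive drags down the whole cluster: in a replicated Ceph pool, a write is acknowledged only after every replica has persisted it. With three hosts, one OSD per host, and the default pool size of 3, every placement group spans all three drives, so every write waits on the slowest one. The Python sketch below is a deliberately simplified model, and the latency figures are hypothetical placeholders, not measurements.

```python
# Hypothetical per-drive write latencies in microseconds (placeholders, not benchmarks).
NVME_US = 100
SATA_SSD_US = 500


def replicated_write_latency_us(replica_latencies_us, network_rtt_us=100):
    """Approximate latency of one write to a replicated Ceph pool.

    Simplified model: the client is acknowledged only after every replica has
    persisted the write, so the slowest replica, plus one network round trip,
    sets the pace.
    """
    if not replica_latencies_us:
        raise ValueError("at least one replica is required")
    return max(replica_latencies_us) + network_rtt_us


if __name__ == "__main__":
    # Three hosts, size=3: each write lands on all three drives.
    print("all NVMe:", replicated_write_latency_us([NVME_US, NVME_US, NVME_US]), "us")
    print("one SATA:", replicated_write_latency_us([NVME_US, NVME_US, SATA_SSD_US]), "us")
```

In this model the mixed cluster is three times slower per write (600 vs 200 us) even though two of its three drives are fast.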


For the Proxmox Backup Server we'll want a similar desktop, but we can ease up on the requirements a bit. We don't need the NVMe slots, and we probably don't need more than 8-16GB of RAM, so two memory slots are enough. We do want a PCIe 3.0 or newer slot for the 10GbE network connection. We also want enough connections for our drives: this could mean 4-6 SATA ports on the motherboard, or an expansion card such as a SATA PCIe adapter. In my case I'm using two PBS hosts. The local one has 4 SATA connections and an NVMe drive that holds the host OS. My remote PBS host does not have enough SATA ports, so I added a RAID card in IT mode, which gave me plenty of connections.

Network

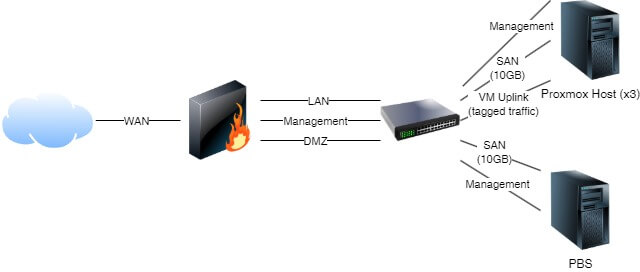

For networking equipment I use an OPNsense firewall and a Brocade ICX6610 switch. Make sure your network has a managed switch, because we will be dividing the network into a management network where our hosts will reside, a SAN network, and the LAN and DMZ networks. You'll want a firewall capable of routing at least three networks, not including WAN. The easiest way is to have one physical interface per network. If possible, though, I recommend the router-on-a-stick method instead (carrying each network as a tagged VLAN over a single link), so that you are not limited by the number of physical interfaces. Once it's all set up, it should look something like this:

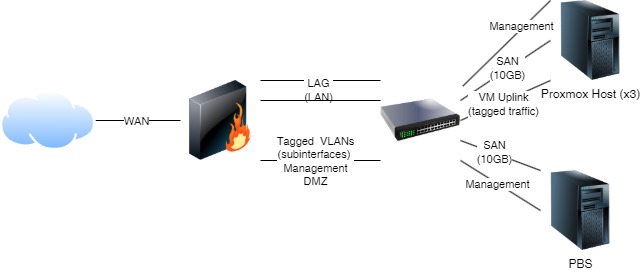
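Before configuring the switch and firewall, it helps to write the addressing plan down and sanity-check it. A minimal Python sketch, assuming hypothetical VLAN IDs and /24 subnets (substitute your own), and assuming the SAN is not routed by the firewall, which is why only three routed networks are needed. It rejects duplicate or out-of-range VLAN IDs and overlapping subnets, then lists the VLAN sub-interfaces a router-on-a-stick firewall would carry on its trunk.

```python
import ipaddress
from itertools import combinations

# Hypothetical addressing plan: VLAN ID and subnet per network.
VLANS = {
    "MGMT": (10, "10.0.10.0/24"),
    "SAN": (20, "10.0.20.0/24"),
    "LAN": (30, "10.0.30.0/24"),
    "DMZ": (40, "10.0.40.0/24"),
}
# Assumption: the SAN stays isolated on the switch; the firewall routes the rest.
ROUTED = ("MGMT", "LAN", "DMZ")


def validate_plan(vlans):
    """Return {name: IPv4Network}, raising ValueError on an inconsistent plan."""
    ids = [vid for vid, _ in vlans.values()]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate VLAN ID")
    for vid in ids:
        if not 1 <= vid <= 4094:  # 0 and 4095 are reserved by 802.1Q
            raise ValueError(f"VLAN ID {vid} out of range")
    nets = {name: ipaddress.ip_network(cidr) for name, (_, cidr) in vlans.items()}
    for (a, net_a), (b, net_b) in combinations(nets.items(), 2):
        if net_a.overlaps(net_b):
            raise ValueError(f"{a} ({net_a}) overlaps {b} ({net_b})")
    return nets


def gateway(net):
    # Convention assumed here: the firewall takes the first usable address.
    return next(net.hosts())


if __name__ == "__main__":
    nets = validate_plan(VLANS)
    for name in ROUTED:
        vid = VLANS[name][0]
        print(f"trunk sub-interface VLAN {vid} ({name}): gateway {gateway(nets[name])}")
```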

Or, here is my network using tagged traffic on one link and the LAN on a LAGG interface (below). If you have a firewall with only two physical interfaces, you could do something similar by passing management, LAN, and DMZ as tagged traffic over one interface, leaving the other for WAN.
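"Tagged traffic" here means 802.1Q VLAN tagging: each frame on the shared link carries a 4-byte tag, inserted after the source MAC address, that names its VLAN, which is how the switch and the firewall's VLAN interfaces keep management, LAN, and DMZ apart on one cable. A short Python sketch of how that tag is laid out; the function names and the VLAN ID are illustrative only.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks a frame as VLAN-tagged


def vlan_tag(vid, pcp=0, dei=0):
    """Build the 4-byte 802.1Q tag: TPID, then PCP (3 bits), DEI (1 bit), VID (12 bits)."""
    if not 1 <= vid <= 4094:
        raise ValueError("VLAN ID must be 1-4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0-7 and DEI 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def tag_frame(frame, vid):
    """Insert the tag after the destination and source MAC addresses (12 bytes)."""
    return frame[:12] + vlan_tag(vid) + frame[12:]


def read_vid(frame):
    """Return the VLAN ID of a tagged frame, or None if it is untagged."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    return tci & 0x0FFF if tpid == TPID_8021Q else None


if __name__ == "__main__":
    untagged = bytes(6) + bytes(6) + b"\x08\x00" + b"payload"  # dst, src, IPv4 EtherType
    tagged = tag_frame(untagged, 30)
    print(tagged[12:16].hex(), read_vid(tagged), read_vid(untagged))
```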

Storage

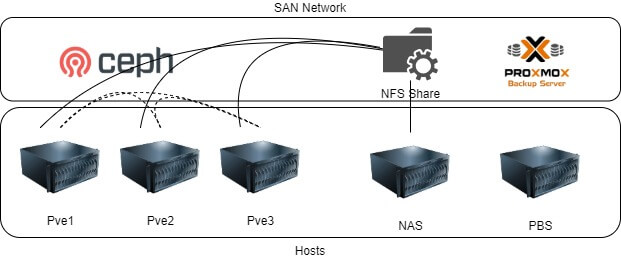

For storage, we want shared storage that supports high availability. There are multiple ways to do this, each with its own advantages and disadvantages: Ceph, GlusterFS, and an NFS share. In Proxmox VE 8.3, Ceph can be set up natively from the GUI, so we'll use it over GlusterFS. This gives us not only high availability but also scalability, since we can simply add more nodes to increase space. Ceph has steeper hardware requirements, but it is the most robust choice for shared storage. As a second shared storage option, we'll also set up an NFS share from a NAS device. This still gives us high availability to a degree, but if the NAS device goes down, so will any VMs stored on it. That is the tradeoff; in return, the hardware requirements are lower, since we only need one more device to host the NFS server. We could even install the NFS server on our Proxmox Backup Server host to consolidate hardware. In my case I'll be using TrueNAS Scale.
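Replication is what makes Ceph highly available, and it also determines how much of the raw NVMe space is actually usable. A rough Python sketch, assuming a replicated pool with Ceph's common default of 3 copies and planning to stay below the default 85% "nearfull" warning threshold; the drive sizes are hypothetical.

```python
def ceph_usable_tb(osd_sizes_tb, replicas=3, nearfull_ratio=0.85):
    """Rough usable capacity of a replicated Ceph pool.

    Every object is stored `replicas` times, so usable space is raw space
    divided by the replica count; the result is then scaled by the nearfull
    ratio, above which Ceph starts warning.
    """
    if replicas < 1 or not 0 < nearfull_ratio <= 1:
        raise ValueError("invalid replicas or nearfull_ratio")
    raw_tb = sum(osd_sizes_tb)
    return raw_tb / replicas * nearfull_ratio


if __name__ == "__main__":
    # Hypothetical: one 1 TB NVMe drive per host.
    print(f"3 nodes: {ceph_usable_tb([1.0] * 3):.2f} TB usable")
    print(f"4 nodes: {ceph_usable_tb([1.0] * 4):.2f} TB usable")
```

This ignores uneven data placement across OSDs. Note also that with exactly three hosts and three copies, losing one host leaves nowhere to rebuild the third copy; the pool keeps running degraded on two copies until the host returns.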

Backups

For backups, we will have a Proxmox Backup Server on the local network and another, remote PBS offsite. The remote PBS will use WireGuard, installed on the host, to connect back home, and a sync job to pull the backups from the local PBS.
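For the remote PBS to reach home, its WireGuard config needs a peer entry pointing at your home endpoint. A minimal Python sketch that renders a wg-quick style config (typically `/etc/wireguard/wg0.conf`) for the remote PBS. The keys, tunnel addresses, endpoint hostname, and subnets are hypothetical placeholders. `PersistentKeepalive` keeps the tunnel alive from behind the remote site's NAT, and `AllowedIPs` must cover the local PBS's subnet so the sync job's traffic is routed through the tunnel.

```python
import ipaddress


def render_wg_config(private_key, tunnel_address, peer_public_key, endpoint,
                     allowed_ips, keepalive_s=25):
    """Render a wg-quick config for the remote PBS (all values are placeholders)."""
    # Fail early on malformed addresses instead of producing a broken config.
    ipaddress.ip_interface(tunnel_address)
    for cidr in allowed_ips:
        ipaddress.ip_network(cidr)  # strict: rejects CIDRs with host bits set
    lines = [
        "[Interface]",
        f"PrivateKey = {private_key}",
        f"Address = {tunnel_address}",
        "",
        "[Peer]",
        f"PublicKey = {peer_public_key}",
        f"Endpoint = {endpoint}",
        f"AllowedIPs = {', '.join(allowed_ips)}",
        f"PersistentKeepalive = {keepalive_s}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_wg_config(
        private_key="<remote-pbs-private-key>",
        tunnel_address="10.99.0.2/24",
        peer_public_key="<home-peer-public-key>",
        endpoint="home.example.net:51820",
        allowed_ips=["10.99.0.0/24", "10.0.10.0/24"],  # tunnel + local PBS subnet (hypothetical)
    ))
```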